Introduction

Greetings to all the Battle Nations enthusiasts and fellow devs out there! For those who might not be familiar, my name is Boris, and I had the privilege of working on the original Battle Nations iPhone app 12 years ago. It was a sad moment when the original game shut down, but I’m extremely excited to be part of this remake journey!

These developer diaries are written primarily for other software engineers. They will document the technical journey, including challenges, trade-offs, lessons learned, and other technical topics. I will personally be focusing on the client architecture, which is being developed in the Unity engine.

A Trip Down Memory Lane – The ECS Architecture

At its technical core, the original Battle Nations app was developed natively for iOS and used an in-house Entity Component System (ECS) architecture. This was revolutionary for its time, especially for a mobile-native game. It gave us flexibility and scalability, both of which were paramount for a game as complex as Battle Nations.

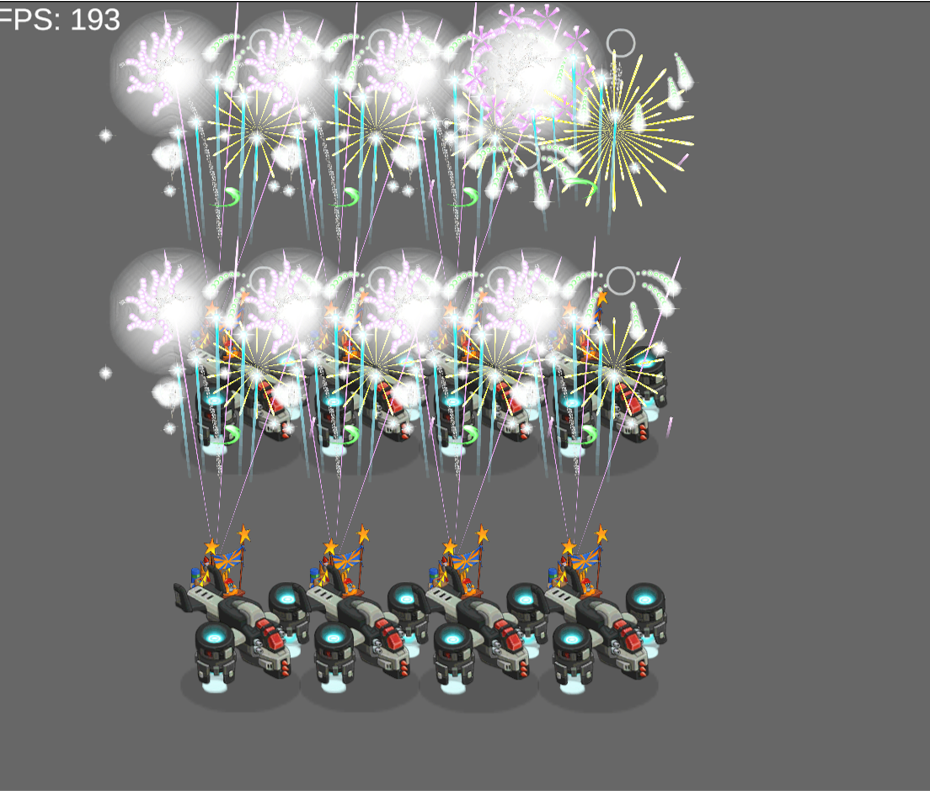

The animation system in particular needed to be extremely performant. Players could build a great many buildings in their base, each with potentially many frames of animation. The animation format was therefore designed out of necessity, so that all of those animations could be rendered at once.

Unity ECS – The Evolution

Unity provides a very powerful ECS API as part of its Data-Oriented Technology Stack (DOTS). It includes a special compiler (Burst) that compiles unmanaged code into very fast native code. It also comes with a Jobs API for scheduling parallel code.

Many of the ideas in the original BN code can be ported fairly directly into the Unity implementation. However, animation poses some unique challenges that require extra attention.

Data Format

The animation format from the original game is being used with minimal changes. It consists of animations (also called timelines) grouped into timeline config files. Each file corresponds to a sprite sheet texture. This means that all animation timelines in the same file use the same texture.

Each animation can have multiple frames but only display one frame at a time. Each frame can have multiple quads that are cut-outs of the corresponding sprite sheet. Each quad also comes with its own affine transform. I call this combination of a quad and its transform a subframe. All subframes within a frame need to be rendered simultaneously, in order.
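To make the hierarchy concrete, here is a minimal sketch of the format as plain data types, written in Python for brevity rather than in the Unity C# the remake uses. Every class, field, and function name here is hypothetical, and the layout of the affine transform (a, b, c, d, tx, ty) is an assumption, not the actual config schema.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Affine:
    """2D affine transform: x' = a*x + c*y + tx, y' = b*x + d*y + ty."""
    a: float = 1.0
    b: float = 0.0
    c: float = 0.0
    d: float = 1.0
    tx: float = 0.0
    ty: float = 0.0

    def apply(self, x: float, y: float) -> Tuple[float, float]:
        return (self.a * x + self.c * y + self.tx,
                self.b * x + self.d * y + self.ty)


@dataclass(frozen=True)
class Subframe:
    """One quad cut out of the sprite sheet, plus its own transform."""
    x: float  # cut-out rectangle, in sprite sheet pixels (assumed units)
    y: float
    w: float
    h: float
    transform: Affine


@dataclass
class Frame:
    subframes: List[Subframe]  # rendered together, in list order


@dataclass
class Timeline:
    frames: List[Frame]  # only one frame is displayed at a time


@dataclass
class TimelineConfig:
    texture: str  # the single sprite sheet shared by every timeline in the file
    timelines: Dict[str, Timeline]


def quad_vertices(sub: Subframe) -> List[Tuple[float, float]]:
    """Transform the quad's four local corners into output positions."""
    corners = [(0.0, 0.0), (sub.w, 0.0), (sub.w, sub.h), (0.0, sub.h)]
    return [sub.transform.apply(cx, cy) for cx, cy in corners]


def quad_uvs(sub: Subframe, sheet_w: float, sheet_h: float) -> List[Tuple[float, float]]:
    """Normalise the cut-out rectangle into 0..1 texture coordinates."""
    u0, v0 = sub.x / sheet_w, sub.y / sheet_h
    u1, v1 = (sub.x + sub.w) / sheet_w, (sub.y + sub.h) / sheet_h
    return [(u0, v0), (u1, v0), (u1, v1), (u0, v1)]
```

Whether the V axis needs flipping depends on the texture origin convention of the renderer, which this sketch does not attempt to model.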

Leveraging ECS for Animation

In both the original and the remake, everything in your base is an Entity with various Components. This includes an animation component that stores the current frame. However, the original game used OpenGL to render the animations to the screen, whereas the remake will use Entities Graphics on top of URP. Entities Graphics is an API that allows rendering from ECS. URP is Unity’s Universal Render Pipeline, which works well for mobile releases.

When an entity (such as a building) is created, the relevant animation components are added, including the ECS-specific components required for rendering. Then, on every update, the RenderSystem schedules jobs to calculate the new frame number for every animation, along with the new vertices and UV coordinates for each animation’s current frame.
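The frame-number step can be illustrated with a small time-to-frame mapping. This is a sketch in Python, not the actual job code: the function name, the `loop` flag, and the assumption that timelines either wrap around or hold on their last frame are all mine. The 30 fps rate is the animation rate mentioned in this post.

```python
import math

ANIMATION_FPS = 30  # animation playback rate stated in this post


def frame_index(elapsed_seconds: float, frame_count: int, loop: bool = True) -> int:
    """Map elapsed time to an animation frame number (hypothetical helper)."""
    if frame_count <= 0:
        raise ValueError("a timeline needs at least one frame")
    # Number of whole animation ticks since the animation started.
    ticks = math.floor(max(elapsed_seconds, 0.0) * ANIMATION_FPS)
    if loop:
        return ticks % frame_count       # wrap around for looping timelines
    return min(ticks, frame_count - 1)   # otherwise hold on the last frame
```

Because the result only changes once per animation tick, a caller running at a higher update rate will see the same frame number several times in a row.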

Challenges

There were many challenges in getting these animations to render correctly in Unity.

- There are 501 timeline config files, and each one can be very large, up to several megabytes. We can use Addressables to load these quickly, but parsing the JSON takes too long. It’s also not feasible to load all 501 textures at load time. So, we need code that loads the correct config and texture on demand, asynchronously, at runtime.

- Using unmanaged code in Jobs is challenging. It means all the types accessed within a job need to be blittable. In other words, they need to be value types that only hold value types. It also means types can’t hold collections with unknown sizes.

- In Unity, Mesh is a class, and classes can’t be blittable since they are reference types. This means we need a blittable intermediate animation output struct that holds the vertices and UV coordinates for each subframe.

- The timeline config data isn’t blittable by default, since each timeline can have any number of frames, and each frame can have any number of subframes. To solve this, we only store subframes as blittable structs, along with their frame and timeline IDs. They are stored in a NativeParallelMultiHashMap, grouped by a combined index made from the timeline (animation) and frame indices. This data structure is accessible from Jobs because it’s a native container.

- Z-ordering many transparent animations correctly took some effort. Simply setting the z coordinate of the entity’s position doesn’t do anything, since transparency requires the objects to be rendered in a specific order, back-to-front. The final fix required setting individual materials for each entity with their own render queue number, which is calculated from their position.

- It’s extremely important that we’re able to render many animations at once, without lag. To support this, we:

  - Only update the mesh if the frame number changes. Animations are rendered at 30 fps and don’t need to change every iteration of the update loop, which happens at a much higher frame rate.

  - Avoid sorting subframe arrays by allocating a single array with a large capacity, and simply setting the correct index. Each subframe already has its sort index from the config. This array can then be reused for all animations.

  - Use IBufferElementData for animation outputs, to associate subframes with entities. This is much faster than using a multi-hash map.
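Several of the ideas in the list above can be sketched in a few lines. The following is a language-neutral illustration in Python, not the Unity implementation: a dict of lists stands in for NativeParallelMultiHashMap, and the key packing, the `AnimationState` class, the dense 0..n-1 sort indices, and the position-to-queue formula are all assumptions made for the example. The only Unity-specific fact used is that the Transparent render queue starts at 3000 and Overlay at 4000.

```python
from collections import defaultdict

FRAME_BITS = 16  # assumed: fewer than 65536 frames per timeline


def combined_key(timeline_index, frame_index):
    """Pack timeline and frame indices into one integer key (hypothetical layout)."""
    return (timeline_index << FRAME_BITS) | frame_index


def flatten(timelines):
    """Flatten timelines -> frames -> subframes into a key -> [subframe] map."""
    store = defaultdict(list)  # stand-in for a multi-hash map
    for t_index, frames in enumerate(timelines):
        for f_index, subframes in enumerate(frames):
            store[combined_key(t_index, f_index)].extend(subframes)
    return store


class AnimationState:
    """Per-entity state: the frame the mesh was last built for."""
    def __init__(self):
        self.built_frame = -1  # -1 means no mesh has been built yet


def needs_rebuild(state, frame_index):
    """Return True, and record the frame, only when the frame actually changed."""
    if state.built_frame == frame_index:
        return False
    state.built_frame = frame_index
    return True


def place_in_order(subframes, scratch):
    """Write each (sort_index, payload) pair at scratch[sort_index]; no sort needed.

    Assumes sort indices within a frame are unique and run 0..n-1.
    Returns n, so scratch[:n] holds the subframes in draw order.
    """
    for sort_index, payload in subframes:
        scratch[sort_index] = payload
    return len(subframes)


TRANSPARENT_QUEUE = 3000  # value of Unity's RenderQueue.Transparent
QUEUE_SLOTS = 1000        # assumed budget, staying below Overlay (4000)


def render_queue_for(y, min_y, max_y):
    """Map a position to a render queue so that objects farther back draw first.

    Assumes larger y means farther back; higher queue numbers draw later.
    """
    if max_y <= min_y:
        return TRANSPARENT_QUEUE
    t = (max_y - y) / (max_y - min_y)  # 0.0 at the back, 1.0 at the front
    t = min(max(t, 0.0), 1.0)
    return TRANSPARENT_QUEUE + int(t * (QUEUE_SLOTS - 1))


# Usage: one timeline with two frames; subframes are stored out of order.
timelines = [[[(1, "roof"), (0, "wall")], [(0, "door")]]]
store = flatten(timelines)
scratch = [None] * 64   # allocated once, reused for every animation
state = AnimationState()
if needs_rebuild(state, 0):
    n = place_in_order(store[combined_key(0, 0)], scratch)
    ordered = scratch[:n]  # ['wall', 'roof']
```

The scratch array is never cleared between animations. Only the first `n` slots are read back, which is what makes the reuse safe under the dense-index assumption.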

Next Steps

Now that we’re able to display animations, we need to test on various devices and optimize the system. Later, we’ll need to integrate the animation system with other systems and make sure it works well with them.

Gallery of Progress